publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2023

PerfectFit: Custom-Fit Garment Design in Augmented Reality
Akihiro Kiuchi, Anran Qi, Eve Mingxiao Li, Dávid Maruscsák, Christian Sandor, and Takeo Igarashi
In SIGGRAPH Asia 2023 XR, Sydney, NSW, Australia, 2023

Best XR Demo Award

Mass production of standard-size garments in the garment industry does not account for differences in individual body shape, resulting in ill-fitting garments and severe overproduction. Unfortunately, the traditional tailor-fitting process is time-consuming, labour-intensive, and expensive. We present PerfectFit, an interactive AR garment design system for fitting garments to individual body shapes. Our system simulates a virtual garment that reacts realistically to the client's body shape and motion, and displays stereoscopic images to the designer through AR glasses. This enables the designer to examine the garment's fit on the moving client in real time and from any viewpoint. Additionally, our system provides the designer with an editing interface for interactively exploring the garment's design space and adjusting its fit, and then reflects these changes on the client's body. For the exhibit, visitors will play the role of the designer and interact with a person playing the role of the client. The visitor, acting as the designer, wears the AR glasses and can see fitting issues on the client's body. Visitors can explore the garment design space freely, and when needed, the author will provide light guidance on where and how to edit the garment to achieve a better fit. Our system will give visitors an immersive experience of customizing a garment like a tailor.

EnchantedBrush: Animating in Mixed Reality for Storytelling and Communication
Eve Mingxiao Li, Anran Qi, Mauricio Sousa, and Tovi Grossman
In Graphics Interface, Victoria, British Columbia, Canada, 2023

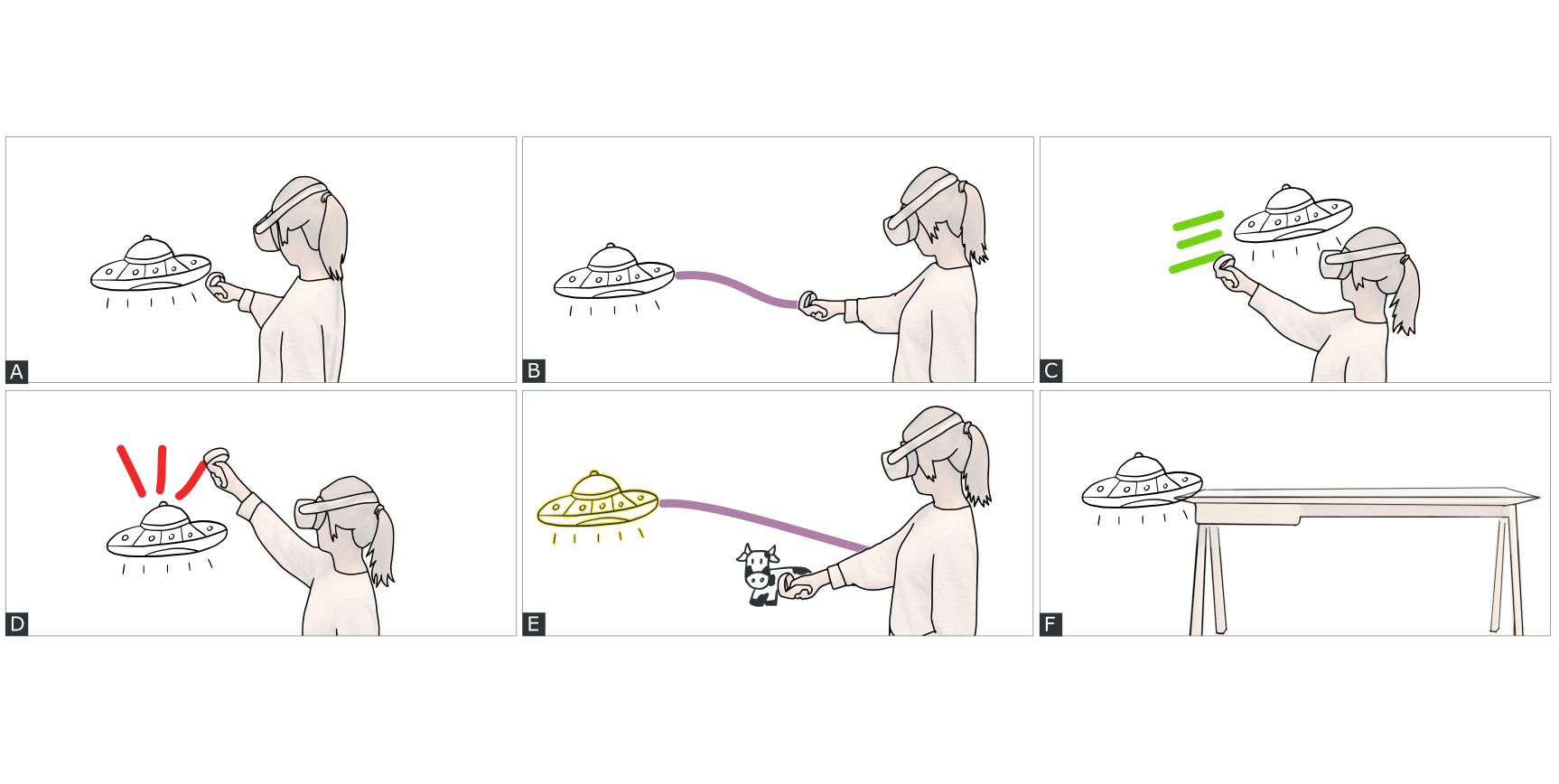

Recent progress in head-worn 3D displays has made mixed-reality storytelling, which allows digital art to interact with the physical surroundings, a new and promising medium for visualizing ideas and bringing sketches to life. While previous works have introduced dynamic sketching and animation in 3D spaces, visual and audio effects typically need to be specified manually. We present EnchantedBrush, a novel mixed-reality approach for creating animated storyboards with automatic motion and sound effects in real-world environments. People can create animations that interact with their physical surroundings using a set of interactive motion and sound brushes. We evaluated our approach with 12 participants, including professional artists. The results suggest that EnchantedBrush facilitates storytelling and communication, and that utilizing the physical environment eases animation authoring and simplifies story creation.

2022

A Drone Video Clip Dataset and its Applications in Automated Cinematography
Amirsaman Ashtari, Raehyuk Jung, Mingxiao Li, and Junyong Noh
In Pacific Graphics, Kyoto, Japan, 2022

Drones have become popular video-capture tools. Drone videos in the wild are first captured and then edited by humans to contain aesthetically pleasing camera motions and scenes. Edited drone videos therefore hold highly useful information for cinematography and for applications such as camera path planning to capture aesthetically pleasing shots. To design intelligent camera path planners, learning drone camera motions from these edited videos is essential. However, this first requires separating the drone clips from edited videos, which commonly contain both drone and non-drone content, and extracting their camera motions. Moreover, existing video search engines return the whole edited video as a semantic search result and cannot return only the drone clips inside it. To address this problem, we propose the first approach that can automatically retrieve drone clips from an unlabeled video collection using high-level search queries, such as "drone clips captured outdoor in daytime from rural places". The retrieved clips also come with their camera motions, camera views, and a 3D reconstruction of the scene, which can help in developing intelligent camera path planners. Training our approach requires numerous examples of edited drone videos, so we introduce the first large-scale dataset composed of edited drone videos, which we also use to train and validate our drone video filtering algorithm. Both quantitative and qualitative evaluations confirm the validity of our method.